How far are we from artificial general intelligence (AGI)? The experts weigh in...

Are we close? Or will it be beyond our lifetimes?

When historians look back at epoch-defining moments in the history of life, they may consider Spring 2022 as the inflection point in AI’s exponential rise. This AI Spring featured a continuous barrage of AI model releases such as DeepMind’s Gopher, Chinchilla, Gato, Google’s PaLM, Imagen, Minerva, and OpenAI’s DALL-E 2. These are just some of the mind-bending highlights.

But where are we on the march to artificial general intelligence (AGI)? How can one judge something that’s so far away, yet getting tantalizingly closer?

Let’s start with the definition of AGI—“the ability to accomplish any cognitive task at least as well as humans.” With this in hand, let’s come back to the question of where we are on the journey to AGI.

Peter Diamandis, a well-known serial entrepreneur and founder of the XPrize, has described how he evaluates startup investment opportunities. Peter looks for the “knee bend,” the point where an exponential curve starts to turn sharply upward.

An AGI graph as of right now may look something like this:

Are the incredible achievements of PaLM and Gato at the knee of the curve? Or, are we above or below the knee?

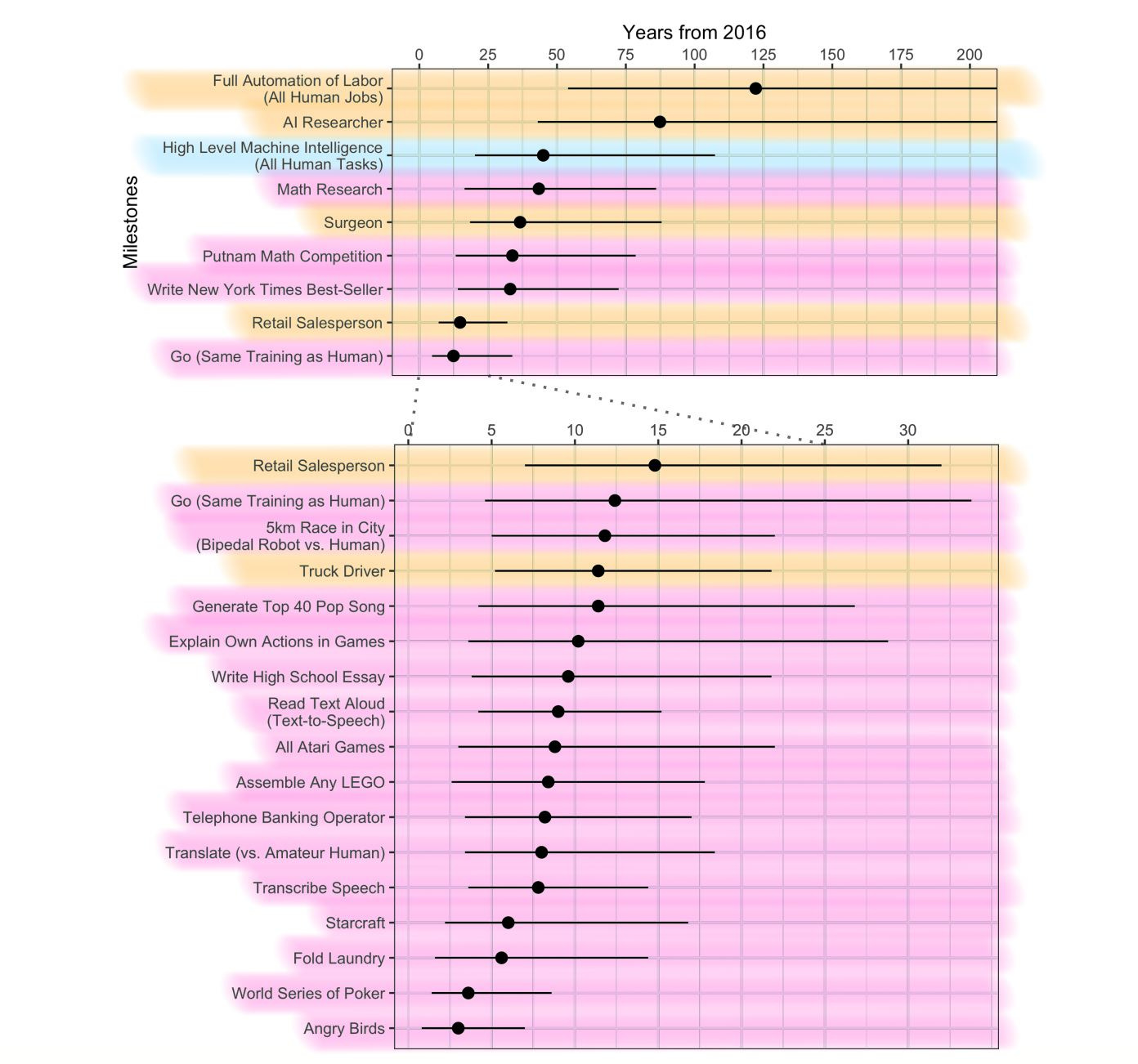

Fortunately, we can consult the AI experts on this. In 2016, a survey polled experts across the field of AI for their predictions on milestones ranging from an AI mastering Go, to writing a novel, to achieving AGI.

The median forecast for AGI was 2055, with a wide range from 2030 to 2130.

However, AI models have already beaten the predicted timelines for several tasks. For example, in 2016, AI experts predicted that the game of Go would not be mastered until 2028. DeepMind’s AlphaGo famously beat one of the best Go players, Lee Sedol, in 2016, twelve years earlier than predicted.

What about the harder game of StarCraft, where humans reigned supreme? Experts predicted human-level performance by 2022. DeepMind’s AlphaStar achieved Grandmaster status in 2019.

Writing a high school essay? Experts predicted that an AI could accomplish such a task by 2026. GPT-3 achieved this feat in 2020. Although GPT-3 essays can contain flaws, their quality is on par with that of an average American high schooler earning a C+ in English class.
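For readers who want to check the arithmetic, here is a minimal Python sketch that takes the predicted and achieved years quoted above at face value and computes how far ahead of the forecast each milestone arrived. The names `MILESTONES` and `years_ahead` are made up for this illustration, and the dates are only the ones cited in this post, not an independent dataset.

```python
# Years as quoted in this post: (milestone, year experts predicted, year achieved).
MILESTONES = [
    ("Go", 2028, 2016),
    ("StarCraft", 2022, 2019),
    ("High school essay", 2026, 2020),
]


def years_ahead(predicted: int, achieved: int) -> int:
    """Return how many years before the predicted date a milestone was reached."""
    return predicted - achieved


# Map each milestone to the number of years it beat the forecast by.
gaps = {
    name: years_ahead(predicted, achieved)
    for name, predicted, achieved in MILESTONES
}

for name, gap in gaps.items():
    print(f"{name}: {gap} years ahead of the forecast")
```

Three data points are far too few to support a trend on their own, but they make the size of each miss easy to compare.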

However, 2016 is ages ago in AI research. Transformer models weren’t invented until 2017 and were not widely adopted until the years that followed. These models have made a transformative difference to AI performance.

So what do AI experts currently believe?

The leading futurist Ray Kurzweil is in a great position to weigh in on this matter. Of his 146 predictions since the 1990s, he boasts an 86% accuracy rate. More importantly, he has been a Director of Engineering at Google for the past 10 years; he lives and breathes AI.

Amazingly, he made a forecast for AGI in his 1999 book. The year of his prediction: 2029.

Ray recently spoke to a small group of Singularity University alumni about his timeline, saying, “I think we will actually beat 2029.” He also mentioned that progress in large language models has surprised even him. Ray concluded by stating that no big breakthroughs are needed to get to AGI.

What about the man of not-so-few-tweets? Elon Musk recently said he would be surprised if we don’t have AGI by 2029 (tweet from 5/30/22).

In a June 2022 interview with Lex Fridman, Demis Hassabis, founder of DeepMind, said that AGI is on the horizon and that no architectural breakthroughs are required, just more engineering and data.

Demis believes AGI will occur in the next decade or two (2032-2042). Compare that to his prediction at a conference in 2018, when he believed AGI was several decades away (2048 and beyond). Thus, in 4 years he pared down his estimate by 16 years.

Another co-founder of DeepMind, Shane Legg, says he still thinks AGI is 50/50 by 2030.

After the release of DeepMind’s Gato model, its Research Director Nando de Freitas tweeted the controversial statement that “It’s all about scale now! The Game is Over! It’s about making these models bigger, safer, compute efficient, faster at sampling, smarter memory, more modalities, innovative data, on/offline.” Nando implies that no major breakthroughs are needed and that AGI is on the horizon, in line with sentiments from Ray Kurzweil and Demis Hassabis.

Eric Schmidt, former Chairman and CEO of Google, said in a Fall 2021 interview that AGI will occur “in the next decade.”

The forecasts of these experts converge around 2029. I would bet the broader AI expert community has a slightly more conservative outlook. If the large survey were repeated, my guess is that it would converge on a date around 2035. That is twenty years earlier than the previous median prediction of 2055.

In summary, we appear to be at the knee of the curve, and AGI seems more than likely to occur by 2030. We will know we are at the knee of the exponential curve if the achievements of 2023 make the achievements of 2022 seem trivial.

If AGI is less than ten years away, what are the implications for society? What does this mean for education, healthcare, employment, and other fields? What are the investment opportunities?

I’ll cover these questions in future posts. Please subscribe if you would like more about AI investing and developments. See you in the future!

If you loved this post, please share it with 5 of your friends and see what they think.

💁🏻‍♂️ Disclaimer: All content on this Substack is for discussion and illustrative purposes only and should not be construed as professional financial advice or a recommendation to buy or sell any securities. Should you need such advice, consult a licensed financial or tax advisor. All views expressed are personal opinion as of the date of publication and are subject to change without responsibility to update views. No guarantee is given regarding the accuracy of information on this Substack. Neither the author nor guests can be held responsible for any direct or incidental loss incurred by applying any of the information offered.

Do you imagine an AGI as being able to do something like this (without any prior knowledge or explicitly coded support):

- Be taught the rules of chess verbally with a small number of visually demonstrated examples

- Play a handful of games

- Explain the rules correctly to someone else, or another instance of itself, in a similar way

This seems like it would fall under "any cognitive task" but feels much farther off than 2029 to me. If I had to bet I'd say it wasn't even achievable without being explicitly coded for at some level.

Do you expect a radical transformation of civilization after the development of AGI? Like massive automation or space colonization or something of that kind?