Every once in a while a revolutionary technology comes along that changes everything. Electricity. The internet. Smartphones.

While entrepreneurs are focused on Deep Learning applications, futurists are focused on artificial general intelligence (AGI). But virtually no one is talking about the era sandwiched between them, an era with incredible potential. Until now.

In the early days of the AI boom, I had a front-row seat to the future as a financial analyst for the core GPU business unit at NVIDIA. I watched the business transform as NVIDIA, the David in this story, outsmarted Intel's Goliath, a company 30x its size! NVIDIA started out in the mid-90s making graphics cards, and only in the last ~15 years realized that graphics processing units (GPUs) are essential for Deep Learning.

In 2007, NVIDIA released CUDA, a parallel computing platform and application programming interface (API) that would change computing forever. CUDA let developers run general-purpose tasks in parallel on GPUs, with speedups of as much as 100x over CPUs on some workloads. Parallel computing has been crucial for complex tasks like nuclear physics, drug discovery, and supercomputing. CUDA was free to use, but it ran only on NVIDIA hardware. Similarly, Apple built its own tech ecosystem, in which the iOS platform could only be accessed through Apple's market-leading products.

At that time, I was already a futurist thinker, and I felt discouraged by NVIDIA's inability to capture the opportunity. Its GPUs could clearly create value, but market adoption of GPU computing was virtually zero. What was the deal?

Timing. Deep Learning's breakout year wasn't until 2012. That year, AI researchers at the University of Toronto built AlexNet, a neural network that could identify objects in photos across 1,000 categories with a stunning ~85% (top-5) accuracy on the ImageNet benchmark. This was dramatically better than any approach before it. The key to their success?

They used NVIDIA GPUs. Little did they know this would be the catalyst that enabled Deep Learning to follow a predictable course: up the S-curve.

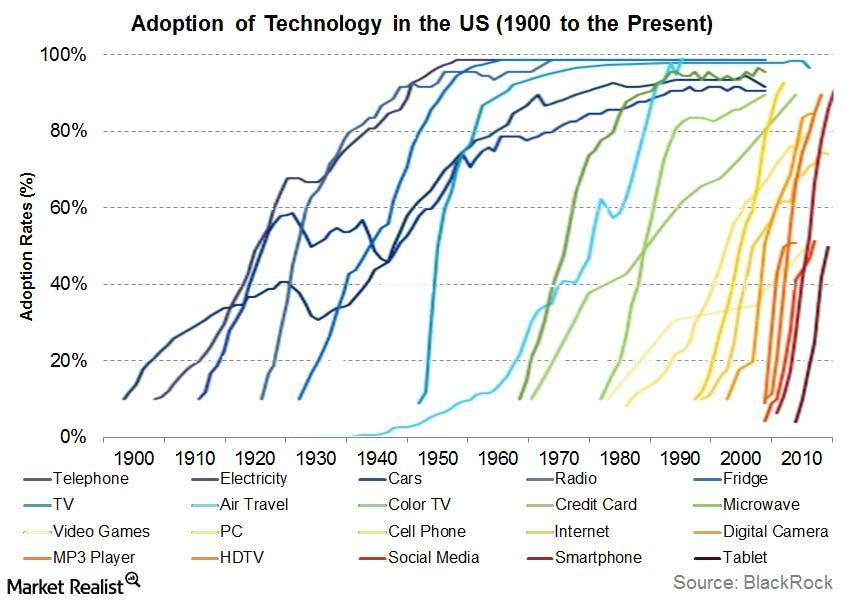

Technology Adoption Curves (S-curves)

S-curves depict how society adopts a product, and the chart above tracks them for technologies going back to 1900! At first, the tail of the “S” rises slowly as the first wave of early adopters signs on; then the product suddenly takes off, growing exponentially, before tapering off as it reaches market saturation. Notice how the S-curves become increasingly vertical with each successive decade. In modern society, new technologies are adopted at lightning speed compared with their 20th-century peers, because each new technology builds on the ones before it, which speeds up its adoption.
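The post describes these curves qualitatively. One common way to model an S-curve is the logistic function, adoption(t) = L / (1 + e^(-k(t - t0))), where L is market saturation, k is the growth rate, and t0 is the year of fastest growth. The sketch below assumes that logistic model; the function names and parameter values are hypothetical, chosen for illustration and not fitted to the chart. A larger k makes the curve more vertical, which gives "faster adoption" a number: the time to climb from 10% to 90% adoption works out to 2·ln(9)/k.

```python
import math

def logistic_adoption(t, saturation=1.0, growth_rate=1.0, midpoint=0.0):
    """Adoption at time t under a logistic S-curve (a hypothetical model)."""
    return saturation / (1.0 + math.exp(-growth_rate * (t - midpoint)))

def years_from_10_to_90_percent(growth_rate):
    """Width of the steep part of the S-curve.

    Solving L / (1 + e^(-k(t - t0))) = 0.1L and 0.9L gives
    t = t0 - ln(9)/k and t = t0 + ln(9)/k, so the gap is 2*ln(9)/k.
    """
    return 2.0 * math.log(9) / growth_rate

# Two hypothetical technologies with the same midpoint (year 10):
# a slow 20th-century-style adopter and a fast modern one.
SLOW_RATE, FAST_RATE = 0.3, 1.2

for year in range(0, 21, 5):
    slow = logistic_adoption(year, growth_rate=SLOW_RATE, midpoint=10)
    fast = logistic_adoption(year, growth_rate=FAST_RATE, midpoint=10)
    print(f"year {year:2d}: slow {slow:6.1%}   fast {fast:6.1%}")

print(f"10% -> 90% adoption: slow {years_from_10_to_90_percent(SLOW_RATE):.1f} years, "
      f"fast {years_from_10_to_90_percent(FAST_RATE):.1f} years")
```

With these made-up rates, the slow curve takes about 14.6 years to climb from 10% to 90% adoption and the fast one about 3.7 years: the same "S", just squeezed horizontally.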

The AI Eras

The first commercial AI era was Deep Learning. Since taking off in 2012, it has continued to dominate the field. We all interact with Deep Learning hundreds of times every day, usually without knowing it. For example, when we speak to Alexa, search for something on Google, or see a recommended video on YouTube, we're interacting with AI algorithms and helping them get better at serving up what we want in the future.

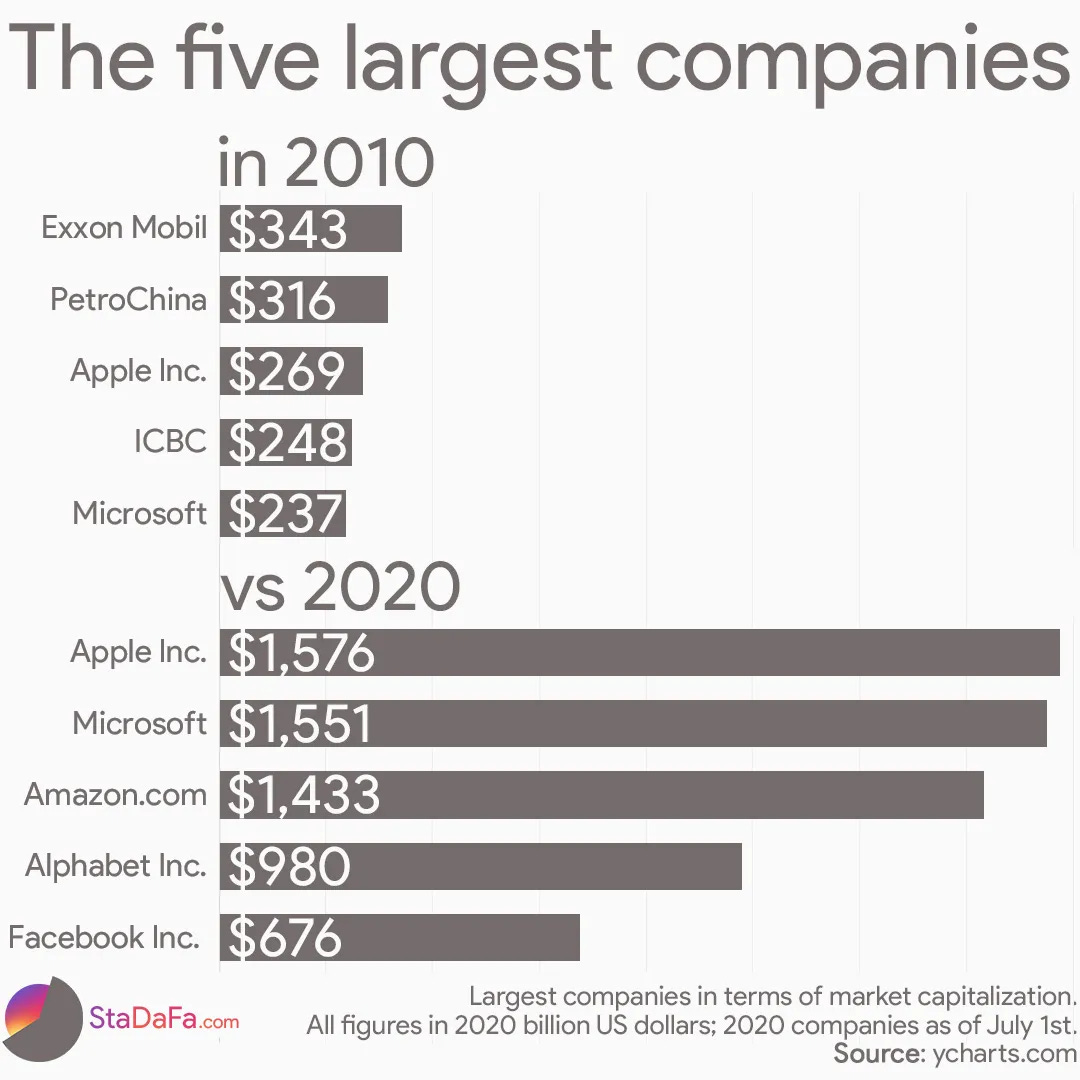

Much of big tech's market-capitalization gains between 2010 and 2020 can be attributed directly to Deep Learning. It has substantially improved all of their core products and made them more relevant to you, the paying customer.

Ten years later, in 2022, we're a good way up Deep Learning's adoption S-curve. It might look something like this:

On the opposite end of the AI timeline lies AGI. Whether it arrives by 2030 (as some experts predict) or 2060, it's the mother of all technology markets. We can't even begin to imagine what a superintelligence could create in the economy.

If you bring the Deep Learning and AGI adoption curves together in one graph, you get the most common view of the AI industry. (Note: The AGI curve really should be off the charts here because it is exponentially bigger, but I shrank it for simplicity.)

However, this commonly held view of the industry completely neglects a middle era, one that is both imminent and incredibly significant in its own right.

The Missing Era: The AI-job Suite

In my prior post, I described this AI era and introduced the AI-job suite. To recap, an AI-job suite automates creative jobs and offers them as a service. A business will be able to select which jobs it wants to “employ” as part of the suite (e.g., graphic designer, software developer, writer). This era is sandwiched between the two predominant eras because it builds on the Deep Learning era, yet is still far from AGI.

Each AI era builds on the previous one, so each is substantially larger than the last.

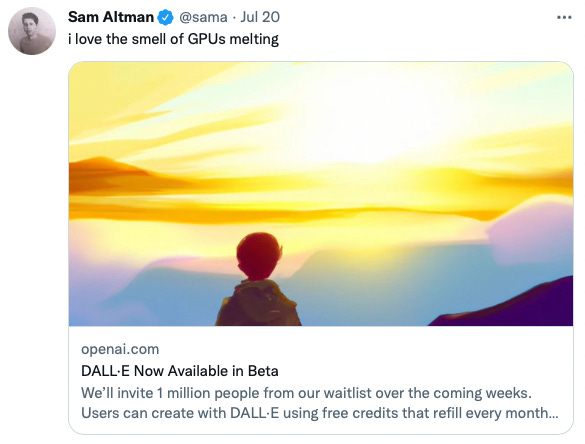

This era is already beginning. Just last week, OpenAI announced the commercialization of DALL·E 2. In making the announcement, CEO Sam Altman fanned the flames by saying:

Remember NVIDIA, the king of GPUs for the last 30 years? OpenAI uses boatloads of its chips. In essence, Altman is saying that his company and others will need an endless supply of GPUs over the coming years as the human labor force starts transitioning to an AI workforce.

Who wins?

What has become strikingly clear is that AI tech is a green field, at least until other big-tech companies catch wind of this opportunity. Apple, Microsoft, Amazon, Alphabet, and Meta are a few of the companies that benefited most over the last decade (see chart below).

But the primary winners going forward will be the companies with an explicit mission to solve AI automation and/or AGI (e.g., Alphabet, OpenAI, Tesla, Numenta, and others). Secondary winners will be those that design or supply the hardware chips these software companies run on (e.g., NVIDIA, AMD, Intel, Graphcore, and Alphabet).

The companies working publicly on AGI are also building products that directly address the AI-job suite era. The best-known ones come from OpenAI and Alphabet (Google and DeepMind). In this way, these companies effectively cap their downside in case AGI doesn't arrive for decades, or ever.

Interestingly, Alphabet is also one of the leaders in Deep Learning. It designs its own AI chips (TPUs), but its demand for AI compute is so immense that it still supplements them with NVIDIA GPUs. Alphabet is the only software company publicly working across all three eras. Stay tuned for future posts on this!

The next era

Just as 2012 was the breakout year for Deep Learning, I believe 2022 will be the breakout year for the AI-job suite. While CUDA was the catalyst for Deep Learning, large AI models have lit the match for a new era of AI.

The AI-job suite market presents an imminent opportunity larger than Deep Learning. Companies stuck in the Deep Learning era will get left behind as the AI-job suite and AGI eras rise to dominate. Most people are completely unaware of any of these eras.

I hope you can now see a new way to look at the future. I started this Substack to help you become more aware of how AI could impact your job or business, your investments, and your life. If you enjoyed reading this post, please subscribe below.

Up next on AI Future: We'll look at a recent biomedical breakthrough made possible by the convergence of different technologies, including AI. See you in the future!

If you enjoyed reading this post, share it with 5 of your friends and see what they think.

💁🏻‍♂️ Disclaimer: All content on this Substack is for discussion and illustrative purposes only and should not be construed as professional financial advice or a recommendation to buy or sell any securities. Should you need such advice, consult a licensed financial or tax advisor. All views expressed are personal opinion as of the date of publication and are subject to change without responsibility to update views. No guarantee is given regarding the accuracy of information on this Substack. Neither the author nor guests can be held responsible for any direct or incidental loss incurred by applying any of the information offered.
